David Weston is Chief Executive of the Teacher Development Trust and Chair of the Department for Education (England) Teachers’ Professional Development Expert Group. He is a governor and former teacher and was a founding Director of the Chartered College of Teaching.

The Education Endowment Foundation has published an evaluation of a trial which includes Lesson Study.

Because of confirmation bias, we all tend to wave through studies that confirm our existing views while carefully scrutinising and picking apart ones that don’t. My organisation has a history of pointing to Lesson Study as a plausible CPD mechanism, so we have to hold our hands up to having looked at this one pretty carefully. As EEF puts it, “The project found no evidence that this version of Lesson Study improves maths and reading attainment at KS2”, even though they also note that “This result does not show that all activities related to Lesson Study are ineffective”.

Before looking in detail, I want to welcome EEF investing in this trial. Despite the increasing volume of evidence, we still need more research about what works.

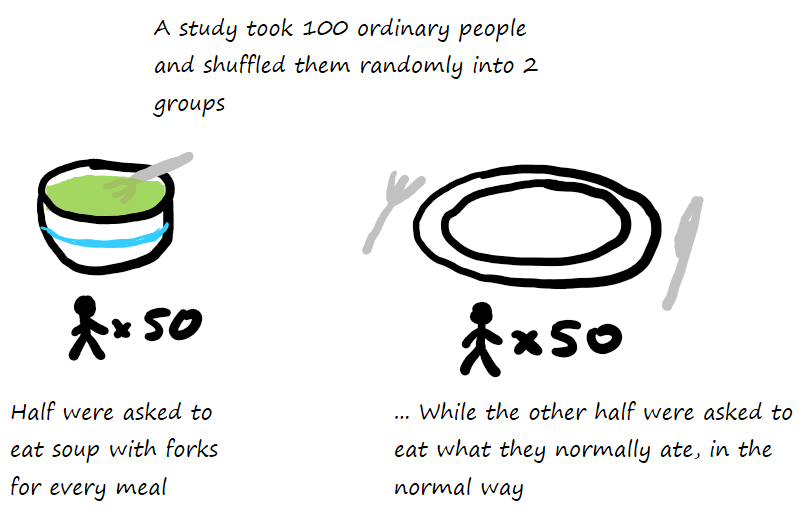

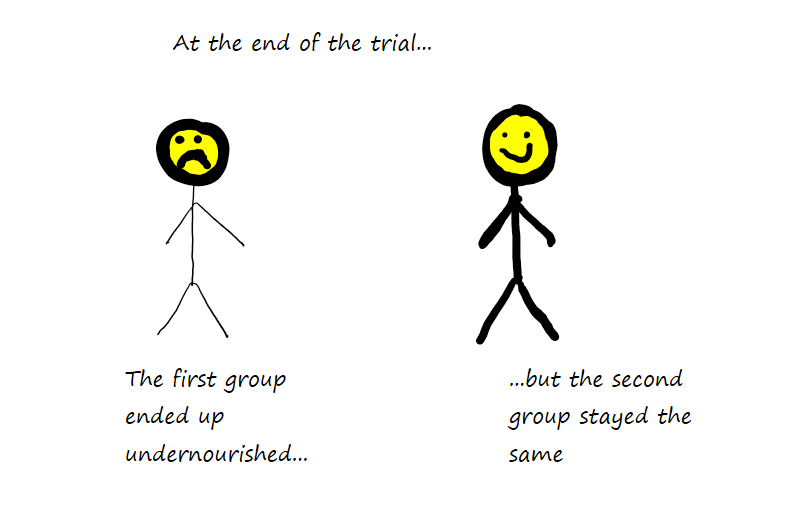

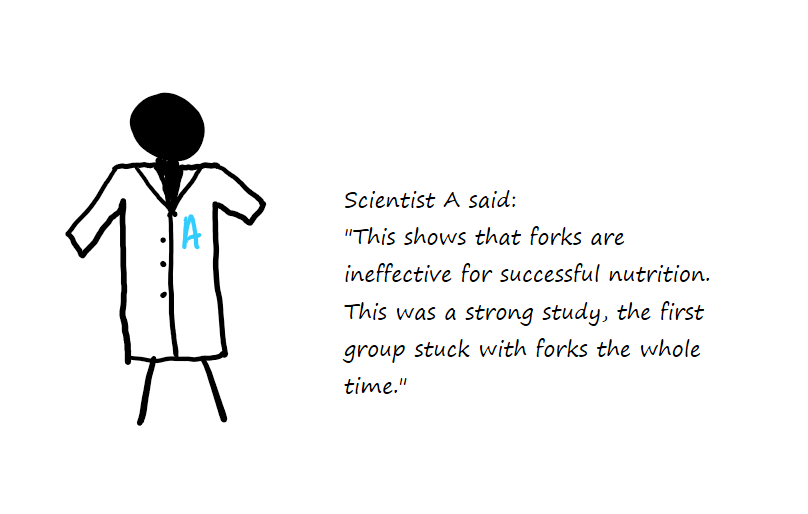

Studying the effectiveness of CPD mechanisms is fiendishly hard. Whatever result you get, it’s almost impossible to know whether it was due to the CPD mechanism, or due to the pedagogical content, or due to the interaction between them. To try and explain this, here’s a silly analogy.

In my very silly and badly drawn example – I am absolutely no Oliver Caviglioli – you can see the issue. When testing a transmission mechanism, the type of content being used matters a lot – not just the quality in itself but also whether that content is suited to the transmission mechanism.

So, we need to look at three things in this study:

- Was the content effective or ineffective?

- Was the mechanism effective or ineffective, by itself or in combination with the content?

- Did the control group do some of the things that the intervention group did?

Let’s look again at the EEF study. In the summary they say that “For this project, Lesson Study was used to deliver Talk for Literacy and Talk for Numeracy interventions”. This is important. We need to consider whether we can plausibly tell if these interventions are likely to improve SATs scores – the outcomes measured in this trial.

Let’s start with Talk for Literacy. The EEF pilot of this found no statistically significant impact on overall reading (albeit it uses the phrase ‘nearly significant’). Talk for Learning has not been evaluated. Digging a bit further into the study itself, it sometimes refers to Talk for Literacy, sometimes to Talk for Numeracy, sometimes to another approach it calls ‘Talk for Learning’, and occasionally to a general mix of pedagogical advice on metacognition and feedback along with some on maths and literacy. It’s not clear whether the team from Edge Hill used a previously validated approach or created a new, untested mix.

Depending on the detail, we can say that the pedagogical content is either wholly untested, or a mix of content already shown to be non-significant plus some untested content, or perhaps based on EEF’s metacognition and feedback advice. Essentially, we can’t pin down whether the pedagogical content was likely to be effective or not. At this stage, the only question we can really ask is: is Lesson Study effective in isolation, regardless of content?

Fortunately, the background evidence section tells us more:

“This EEF trial of the Lesson Study programme set out to robustly evaluate this intervention and is one of the largest randomised trials to date focusing on the effectiveness of this form of teacher development. Previous papers either used quasi-experimental methods (Taylor and Tyler, 2012) or were based on smaller samples (Lewis and Perry, 2015). Lewis and Perry implemented the only other RCT of Lesson Study involving 213 teachers (equivalent to 71 tripods) in 39 groups over an 80–91-day period. Using a three branch trial with control, Lesson Study, and Lesson Study plus additional maths materials, they found positive significant impacts on self-reported ‘Collegial Learning Effectiveness’ and ‘Expectations for Student Achievement’ when Lesson Study is combined with the maths materials. This group also saw improvements in the students’ understanding of fractions, which was the focus of this Lesson Study trial. There were no improvements when Lesson Study was used in isolation.”

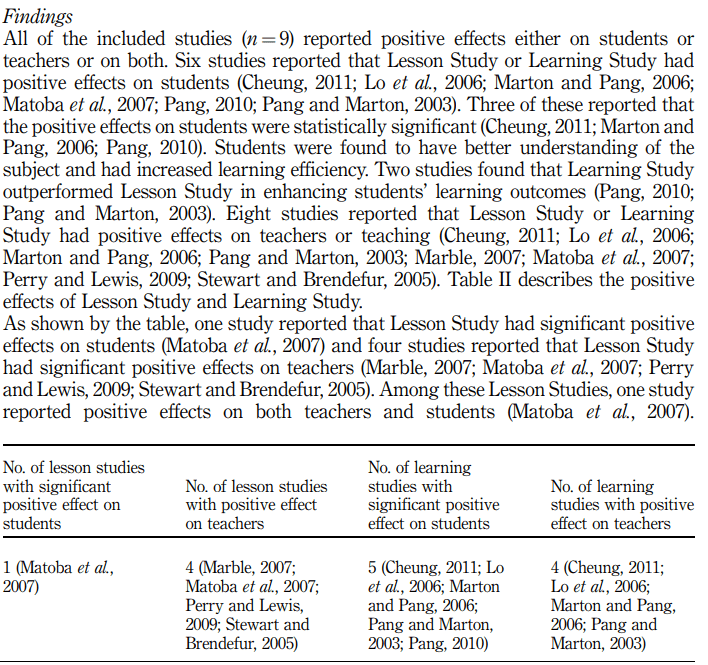

We can also look at other evidence on Lesson Study. This excerpt is from Cheung and Wong (2014) – a systematic review on the effects of Lesson Study.

Since that review, I’m also aware of studies including Lewis and Perry (2015) and Lewis and Perry (2017), which both show statistically significant, positive results using a Lesson Study approach with some very specific interventions in mathematics. That said, I don’t know of a more recent systematic review of Lesson Study, so there is a danger that those last two are cherry-picked. I will also admit that I haven’t explored the methodology of those papers in enough detail to see whether they suffer from any of the same issues as this EEF trial.

So, there are a number of trials, albeit much smaller than this EEF trial, which suggest that a Lesson Study approach can have significant, positive impact on student outcomes, but only when the content is of high quality. We already had a strong sense that Lesson Study is unlikely to work where pedagogical content is not effective.

Finally, we also need to look at the control group. Did they abstain from both Lesson Study and ‘Talk for Learning’ type approaches? The study found that “There is evidence that some control schools implemented similar approaches to Lesson Study, such as teacher observation” and that 4 of 11 control schools sampled had implemented Lesson Study. It also found that “Talk for Learning was not seen to have an influence on its own, particularly since schools were already using a version of this already”.

What can we therefore conclude from this EEF trial?

There are some possible options.

- If we decided to ignore the above and assume that the pedagogical content was effective, then either:

  - Lesson Study is an ineffective mechanism in all cases, or

  - it was an ineffective mechanism in this particular case.

- If we were determined to conclude that Lesson Study is always effective (which is also not plausible), then we would conclude that either:

  - this implementation is flawed, or

  - this pedagogical content is definitely bad.

In my view, none of the above conclusions are supported by any reasonable reading of this study and the wider evidence base. We also need to question the extent to which we can draw any strong conclusions from a study where so many in the control group appeared to be engaging in similar practice.

We can probably be clearer that Lesson Study is no panacea: using this process is no guarantee of good outcomes. That’s no huge surprise – the Developing Great Teaching systematic review suggests that any CPD mechanism is insufficient unless the pedagogical content is effective and that the whole process is supported with effective facilitation, expert input and a supportive leadership and culture. This study should give additional caution to anyone who suggests that adding a Lesson Study mechanism to CPD will be sufficient in itself.

In addition, we would caution against the increasingly loose use of the phrase ‘lesson study’, which we’ve seen applied to many processes that are very dissimilar to each other. Even in Japan, the phrase is used for different approaches. We are gradually using the phrase Collaborative Lesson Research instead, to try to deal with this.

From a TDT perspective, we will continue to use Lesson Study (or Collaborative Lesson Research) as a very plausibly effective CPD mechanism, while continuing to look at any new findings from research into it. We have always said that to make Lesson Study work successfully, a school needs:

- High quality expert input around subject content, pedagogy (general and content-specific) and the Lesson Study process itself

- Senior leadership support, making time, removing competing priorities and ensuring that there is a culture of trust and supportive engagement with research

- Strong engagement with evidence and high quality assessment approaches, both in formulating the focus of the Lesson Study and in the choosing and refining of the intervention.

We would like to see several further studies conducted based on pedagogical content which has been strongly validated by research, using multiple CPD mechanisms, over a large number of schools. This would be a more effective way of testing the suitability and effectiveness of different CPD approaches for helping teachers to develop in different areas.

Finally, we will continue to challenge over-enthusiasm about Lesson Study as a panacea as much as we will also challenge anyone who claims that this recent EEF study is the death knell for the process. We cannot afford to waste precious CPD time with unevidenced approaches, nor can we afford to drop a plausibly effective one due to the findings from one single trial which has significant questions hanging over it. TDT will continue to base our work around systematic reviews of evidence.

P.S. I’ve done my best not to misrepresent anything here, but acknowledging my inevitable confirmation bias, please do let me know if you think there’s anything in this blog that doesn’t appear balanced – you can comment or email me: david.weston@tdtrust.org