What’s going wrong? Focusing on Mathematical Knowledge: The Impact of Content-Intensive Teacher Professional Development

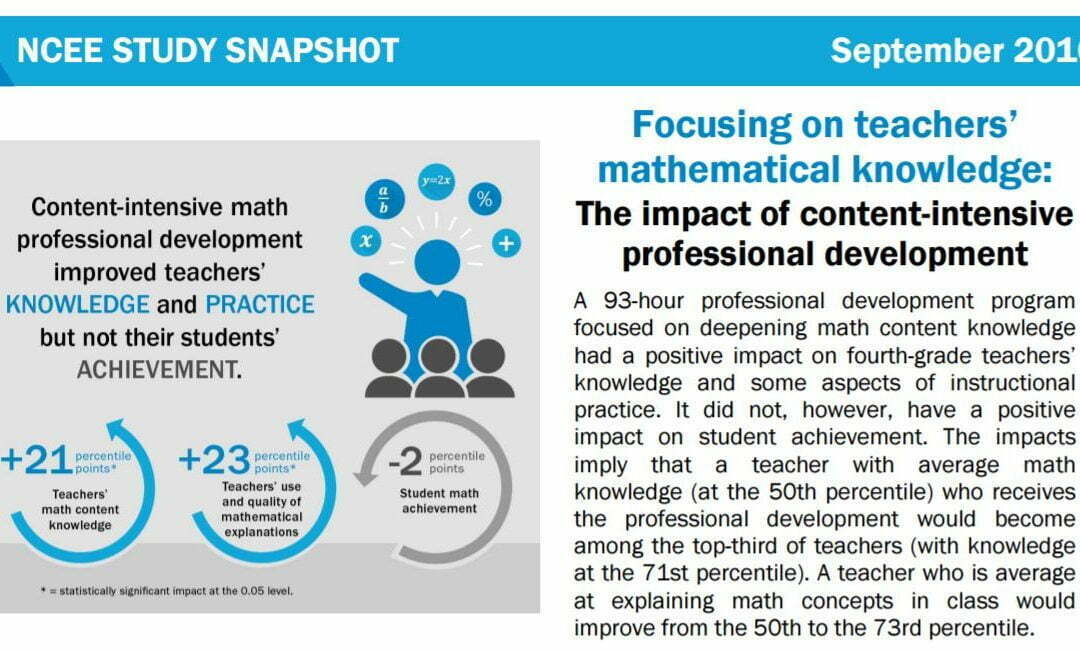

In September, a rather surprising study was released by the US Institute of Education Sciences. ‘Focusing on teachers’ mathematical knowledge: The impact of content-intensive professional development’ took a sample of 165 fourth grade (Year 5) teachers in 74 schools across the US, assessed their instructional practice, as well as student outcomes, and finally concluded that intensive CPD “improved teachers’ knowledge and some aspects of classroom practice but did not improve student achievement”.

At the Teacher Development Trust, we’re raising awareness of the importance of professional development and building tools to help teachers improve professionally in order to achieve success for all young people. Yet this IES study stands at odds with previous research showing that engagement with continual professional learning can be transformative both to teachers’ practice and to pupil attainment. To explore this further, TDT consulted Philippa Cordingley (CUREE) and Professor Daniel Muijs (University of Southampton) on the implications of this study and what we should take from it going forward.

Philippa Cordingley, @PhilippaCcuree

“This was a resource-intensive CPD programme with a very specific focus on enhancing teachers’ mathematical understanding to enrich mathematics teaching. Lasting over 93 hours in total, it involved an intense 80-hour summer (holiday?) workshop and follow-up activities comprising five two-hour collaborative Mathematics Learning Community meetings focused on analysing student work and three one-hour video feedback sessions focused on reinforcing maths content. Although it enhanced teachers’ subject knowledge, it had a small negative impact on pupils’ learning. Why?

There’s not much detail about the CPD, but the programme seems to depart significantly from the characteristics of effective Continuing Professional Development and Learning (CPDL) identified in the systematic “Developing Great Teaching” review (Cordingley et al., 2015). Here are some relating to application and planning:

- The 80-hour workshop was focused on immersion in developing mathematical knowledge and took place over the summer, when it would have been difficult for teachers to use their new knowledge to plan specifically for their classes – they hadn’t even met them yet.

- The Mathematics Learning Communities were designed to support enactment in the classroom, but the focus was reviewing student work, not, apparently, planning for the future. Where are the iterative cycles of “plan, do and review” based learning?

- The focus on analysing “the richness, coherence, and depth of teachers’ mathematics instruction; their capacity to promote student participation and the precision and clarity of their mathematical language” in the mathematics communities also seems to be more about the teaching than the learning; where, for example, is the detail about the goals for students?

- Similarly, although use of video can be a powerful professional learning tool, its use here seems to be focussed on reinforcing the content rather than understanding how the content and the learning interact; it reads as though the impact on pupils was secondary to efforts to spot teachers using their new knowledge.

I am not sufficiently familiar with teaching in America to be sure about this interpretation. It may well be that a richer description of the CPD process would answer some of these questions. But based on the detail here this looks unfortunately like CPD done to teachers rather than CPDL done by them. Deep content knowledge matters – but its application to making a difference for learners needs to be embedded throughout!”

Professor Daniel Muijs, @ProfDanielMuijs

“This is an interesting study on the implementation of a PD initiative focussed on mathematical content knowledge. The study used a within-school RCT design, and in terms of changes to content knowledge, was successful. This was, however, only partly the case for teacher classroom practices, while no significant impact was found on student outcomes. The theory of change, that improved content knowledge will positively affect teachers’ classroom practice, which will in turn lead to enhanced pupil attainment, is a reasonable one, and it is therefore surprising that no impact on pupil attainment was found, especially as the tests were aligned to the content taught in the PD initiative. Random assignment within schools appears to have worked well enough, and there are no major problems with the sample. Implementation fidelity appears good. So what could explain the no-effect findings for attainment? There are a number of possibilities:

- The timeframe may not be sufficient. It is possible that the impact of improved subject knowledge requires additional time to affect practices and subsequently attainment. However, a year is not an insignificant amount of time, and the intervention was quite intensive.

- The attainment measures used may be misaligned to the intervention, or lack sensitivity. The former appears not to be the case, as the standardised NWEA test was adapted to the content of the intervention (it may be the case for the state tests, however). The latter appears unlikely for the NWEA test, which shows sufficient scope for variance at the top end in this sample.

- Teacher subject knowledge in this sample may already have been sufficient for the effective teaching of grade 4 Maths. This is possible, as the relationship between subject knowledge and attainment is curvilinear, in that there is a diminishing return from increased subject knowledge once a sufficient level has been reached.

- The key may lie in the lack of a relationship between the dimensions of teacher practice measured using the MQI and pupil attainment, which would then explain why the PD programme, the instructional component of which focussed very much on the MQI dimensions, did not have an impact. This may result from the instructional approaches measured in the MQI, which tend to be very pupil-centred and are not well correlated with attainment outcomes.”

We’d love to hear your reflections and opinions on the NCEE report – so please do share your ideas in the comments below or tweet us @TeacherDevTrust.

To view the full ‘Developing Great Teaching’ review, whose key finding was that professional development opportunities that are carefully designed and have a strong focus on pupil outcomes do have a significant impact on student achievement, visit: https://tdtrust.org/about/dgt.